pacman::p_load("data.table", "dplyr", "tidyr",

"caret",

"ggplot2", "GGally")

titanic <- fread("../Titanic.csv") # 데이터 불러오기

titanic %>%

as_tibble1 Nearest Neighborhood Algorithm

Nearest Neighborhood Algorithm의 장점

- 알고리듬이 매우 간단하여 이해하기 쉽다.

- 관측된 데이터셋에 대해 분포를 가정할 필요가 없다.

- 훈련하는 동안 계산적 비용이 거의 없다.

Nearest Neighborhood Algorithm의 단점

- 범주형 예측 변수를 다룰 수 없다.

- Dummy 또는 One-hot Encoding 변환 후 사용할 수 있다.

- 데이터셋의 크기가 큰 경우, 새로운 Case와 다른 Case들과의 거리 계산에 시간이 많이 걸릴 수 있다.

- 이상치와 노이즈가 있는 경우, 예측에 큰 영향을 미친다.

- 고차원의 경우, 성능이 좋지 않다.

- 차원이 높아질수록 Case간 거리 차이가 거의 없는 것처럼 보여, 가장 가까운 거리를 찾는 것이 어렵다.

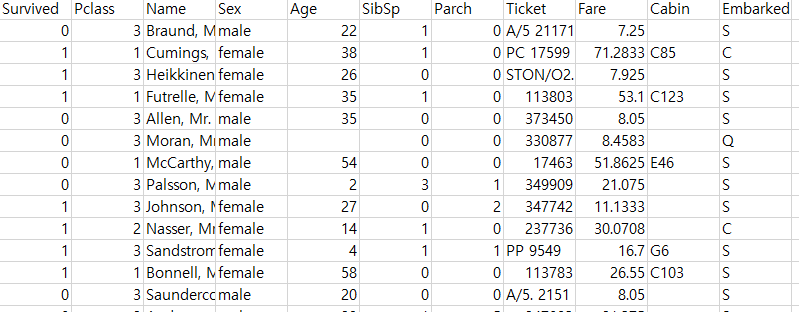

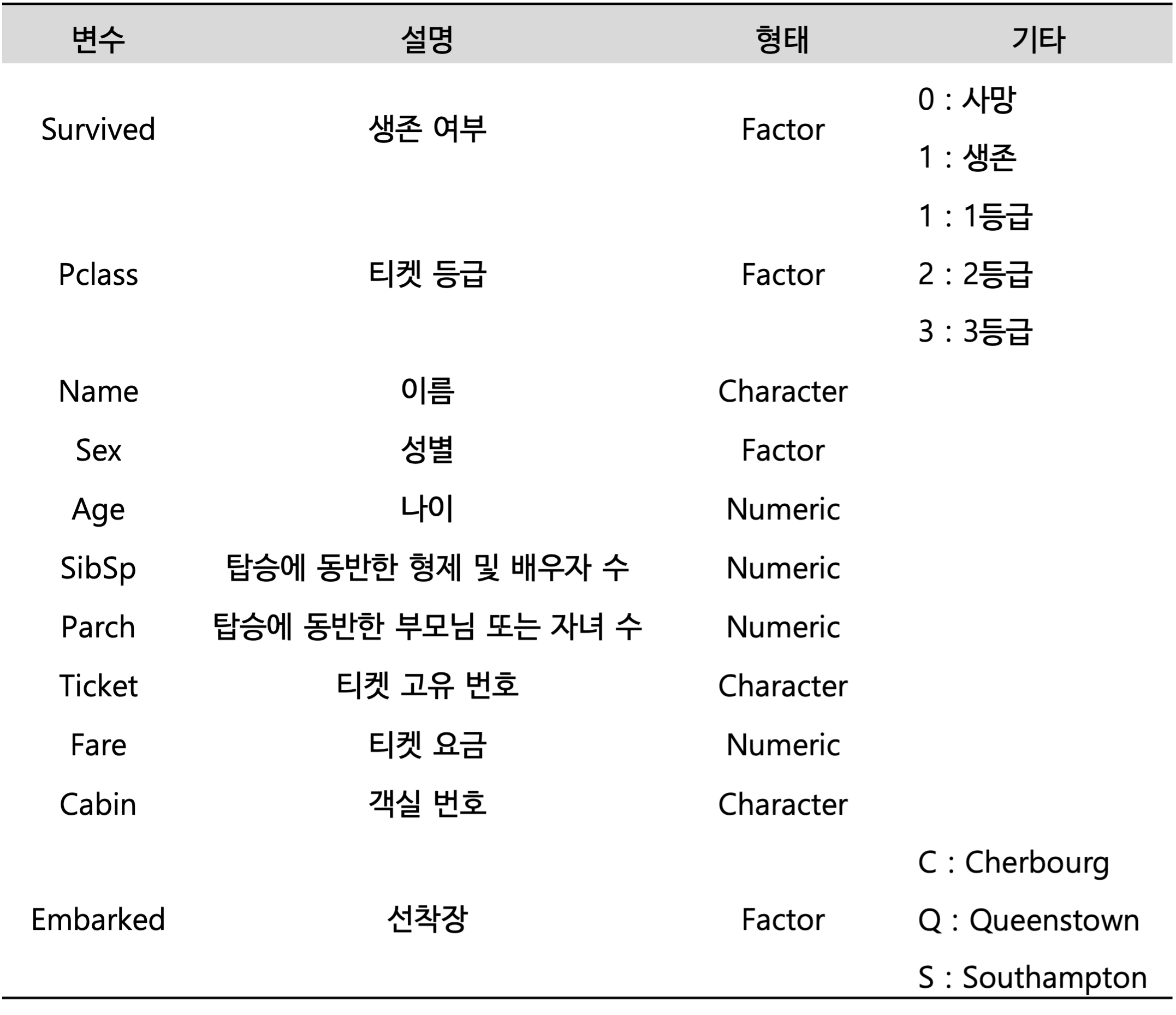

실습 자료 : 1912년 4월 15일 타이타닉호 침몰 당시 탑승객들의 정보를 기록한 데이터셋이며, 총 11개의 변수를 포함하고 있다. 이 자료에서 Target은

Survived이다.

1.1 데이터 불러오기

# A tibble: 891 × 11

Survived Pclass Name Sex Age SibSp Parch Ticket Fare Cabin Embarked

<int> <int> <chr> <chr> <dbl> <int> <int> <chr> <dbl> <chr> <chr>

1 0 3 Braund, Mr. Owen Harris male 22 1 0 A/5 21171 7.25 "" S

2 1 1 Cumings, Mrs. John Bradley (Florence Briggs Thayer) female 38 1 0 PC 17599 71.3 "C85" C

3 1 3 Heikkinen, Miss. Laina female 26 0 0 STON/O2. 3101282 7.92 "" S

4 1 1 Futrelle, Mrs. Jacques Heath (Lily May Peel) female 35 1 0 113803 53.1 "C123" S

5 0 3 Allen, Mr. William Henry male 35 0 0 373450 8.05 "" S

6 0 3 Moran, Mr. James male NA 0 0 330877 8.46 "" Q

7 0 1 McCarthy, Mr. Timothy J male 54 0 0 17463 51.9 "E46" S

8 0 3 Palsson, Master. Gosta Leonard male 2 3 1 349909 21.1 "" S

9 1 3 Johnson, Mrs. Oscar W (Elisabeth Vilhelmina Berg) female 27 0 2 347742 11.1 "" S

10 1 2 Nasser, Mrs. Nicholas (Adele Achem) female 14 1 0 237736 30.1 "" C

# ℹ 881 more rows1.2 데이터 전처리 I

titanic %<>%

data.frame() %>% # Data Frame 형태로 변환

mutate(Survived = ifelse(Survived == 1, "yes", "no")) # Target을 문자형 변수로 변환

# 1. Convert to Factor

fac.col <- c("Pclass", "Sex",

# Target

"Survived")

titanic <- titanic %>%

mutate_at(fac.col, as.factor) # 범주형으로 변환

glimpse(titanic) # 데이터 구조 확인Rows: 891

Columns: 11

$ Survived <fct> no, yes, yes, yes, no, no, no, no, yes, yes, yes, yes, no, no, no, yes, no, yes, no, yes, no, yes, yes, yes, no, yes, no, no, yes, no, no, yes, yes, no, no, no, yes, no, no, yes, no…

$ Pclass <fct> 3, 1, 3, 1, 3, 3, 1, 3, 3, 2, 3, 1, 3, 3, 3, 2, 3, 2, 3, 3, 2, 2, 3, 1, 3, 3, 3, 1, 3, 3, 1, 1, 3, 2, 1, 1, 3, 3, 3, 3, 3, 2, 3, 2, 3, 3, 3, 3, 3, 3, 3, 3, 1, 2, 1, 1, 2, 3, 2, 3, 3…

$ Name <chr> "Braund, Mr. Owen Harris", "Cumings, Mrs. John Bradley (Florence Briggs Thayer)", "Heikkinen, Miss. Laina", "Futrelle, Mrs. Jacques Heath (Lily May Peel)", "Allen, Mr. William Henry…

$ Sex <fct> male, female, female, female, male, male, male, male, female, female, female, female, male, male, female, female, male, male, female, female, male, male, female, male, female, femal…

$ Age <dbl> 22.0, 38.0, 26.0, 35.0, 35.0, NA, 54.0, 2.0, 27.0, 14.0, 4.0, 58.0, 20.0, 39.0, 14.0, 55.0, 2.0, NA, 31.0, NA, 35.0, 34.0, 15.0, 28.0, 8.0, 38.0, NA, 19.0, NA, NA, 40.0, NA, NA, 66.…

$ SibSp <int> 1, 1, 0, 1, 0, 0, 0, 3, 0, 1, 1, 0, 0, 1, 0, 0, 4, 0, 1, 0, 0, 0, 0, 0, 3, 1, 0, 3, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 2, 1, 1, 1, 0, 1, 0, 0, 1, 0, 2, 1, 4, 0, 1, 1, 0, 0, 0, 0, 1, 5, 0…

$ Parch <int> 0, 0, 0, 0, 0, 0, 0, 1, 2, 0, 1, 0, 0, 5, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 5, 0, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 2, 2, 0…

$ Ticket <chr> "A/5 21171", "PC 17599", "STON/O2. 3101282", "113803", "373450", "330877", "17463", "349909", "347742", "237736", "PP 9549", "113783", "A/5. 2151", "347082", "350406", "248706", "38…

$ Fare <dbl> 7.2500, 71.2833, 7.9250, 53.1000, 8.0500, 8.4583, 51.8625, 21.0750, 11.1333, 30.0708, 16.7000, 26.5500, 8.0500, 31.2750, 7.8542, 16.0000, 29.1250, 13.0000, 18.0000, 7.2250, 26.0000,…

$ Cabin <chr> "", "C85", "", "C123", "", "", "E46", "", "", "", "G6", "C103", "", "", "", "", "", "", "", "", "", "D56", "", "A6", "", "", "", "C23 C25 C27", "", "", "", "B78", "", "", "", "", ""…

$ Embarked <chr> "S", "C", "S", "S", "S", "Q", "S", "S", "S", "C", "S", "S", "S", "S", "S", "S", "Q", "S", "S", "C", "S", "S", "Q", "S", "S", "S", "C", "S", "Q", "S", "C", "C", "Q", "S", "C", "S", "…# 2. Generate New Variable

titanic <- titanic %>%

mutate(FamSize = SibSp + Parch) # "FamSize = 형제 및 배우자 수 + 부모님 및 자녀 수"로 가족 수를 의미하는 새로운 변수

glimpse(titanic) # 데이터 구조 확인Rows: 891

Columns: 12

$ Survived <fct> no, yes, yes, yes, no, no, no, no, yes, yes, yes, yes, no, no, no, yes, no, yes, no, yes, no, yes, yes, yes, no, yes, no, no, yes, no, no, yes, yes, no, no, no, yes, no, no, yes, no…

$ Pclass <fct> 3, 1, 3, 1, 3, 3, 1, 3, 3, 2, 3, 1, 3, 3, 3, 2, 3, 2, 3, 3, 2, 2, 3, 1, 3, 3, 3, 1, 3, 3, 1, 1, 3, 2, 1, 1, 3, 3, 3, 3, 3, 2, 3, 2, 3, 3, 3, 3, 3, 3, 3, 3, 1, 2, 1, 1, 2, 3, 2, 3, 3…

$ Name <chr> "Braund, Mr. Owen Harris", "Cumings, Mrs. John Bradley (Florence Briggs Thayer)", "Heikkinen, Miss. Laina", "Futrelle, Mrs. Jacques Heath (Lily May Peel)", "Allen, Mr. William Henry…

$ Sex <fct> male, female, female, female, male, male, male, male, female, female, female, female, male, male, female, female, male, male, female, female, male, male, female, male, female, femal…

$ Age <dbl> 22.0, 38.0, 26.0, 35.0, 35.0, NA, 54.0, 2.0, 27.0, 14.0, 4.0, 58.0, 20.0, 39.0, 14.0, 55.0, 2.0, NA, 31.0, NA, 35.0, 34.0, 15.0, 28.0, 8.0, 38.0, NA, 19.0, NA, NA, 40.0, NA, NA, 66.…

$ SibSp <int> 1, 1, 0, 1, 0, 0, 0, 3, 0, 1, 1, 0, 0, 1, 0, 0, 4, 0, 1, 0, 0, 0, 0, 0, 3, 1, 0, 3, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 2, 1, 1, 1, 0, 1, 0, 0, 1, 0, 2, 1, 4, 0, 1, 1, 0, 0, 0, 0, 1, 5, 0…

$ Parch <int> 0, 0, 0, 0, 0, 0, 0, 1, 2, 0, 1, 0, 0, 5, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 5, 0, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 2, 2, 0…

$ Ticket <chr> "A/5 21171", "PC 17599", "STON/O2. 3101282", "113803", "373450", "330877", "17463", "349909", "347742", "237736", "PP 9549", "113783", "A/5. 2151", "347082", "350406", "248706", "38…

$ Fare <dbl> 7.2500, 71.2833, 7.9250, 53.1000, 8.0500, 8.4583, 51.8625, 21.0750, 11.1333, 30.0708, 16.7000, 26.5500, 8.0500, 31.2750, 7.8542, 16.0000, 29.1250, 13.0000, 18.0000, 7.2250, 26.0000,…

$ Cabin <chr> "", "C85", "", "C123", "", "", "E46", "", "", "", "G6", "C103", "", "", "", "", "", "", "", "", "", "D56", "", "A6", "", "", "", "C23 C25 C27", "", "", "", "B78", "", "", "", "", ""…

$ Embarked <chr> "S", "C", "S", "S", "S", "Q", "S", "S", "S", "C", "S", "S", "S", "S", "S", "S", "Q", "S", "S", "C", "S", "S", "Q", "S", "S", "S", "C", "S", "Q", "S", "C", "C", "Q", "S", "C", "S", "…

$ FamSize <int> 1, 1, 0, 1, 0, 0, 0, 4, 2, 1, 2, 0, 0, 6, 0, 0, 5, 0, 1, 0, 0, 0, 0, 0, 4, 6, 0, 5, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 2, 1, 1, 1, 0, 3, 0, 0, 1, 0, 2, 1, 5, 0, 1, 1, 1, 0, 0, 0, 3, 7, 0…# 3. Select Variables used for Analysis

titanic1 <- titanic %>%

select(Survived, Pclass, Sex, Age, Fare, FamSize) # 분석에 사용할 변수 선택

# 4. Convert One-hot Encoding for 범주형 예측 변수

dummies <- dummyVars(formula = ~ ., # formula : ~ 예측 변수 / "." : data에 포함된 모든 변수를 의미

data = titanic1[,-1], # Dataset including Only 예측 변수 -> Target 제외

fullRank = FALSE) # fullRank = TRUE : Dummy Variable, fullRank = FALSE : One-hot Encoding

titanic.Var <- predict(dummies, newdata = titanic1) %>% # 범주형 예측 변수에 대한 One-hot Encoding 변환

data.frame() # Data Frame 형태로 변환

glimpse(titanic.Var) # 데이터 구조 확인Rows: 891

Columns: 8

$ Pclass.1 <dbl> 0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0,…

$ Pclass.2 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0,…

$ Pclass.3 <dbl> 1, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1,…

$ Sex.female <dbl> 0, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 0,…

$ Sex.male <dbl> 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 1,…

$ Age <dbl> 22.0, 38.0, 26.0, 35.0, 35.0, NA, 54.0, 2.0, 27.0, 14.0, 4.0, 58.0, 20.0, 39.0, 14.0, 55.0, 2.0, NA, 31.0, NA, 35.0, 34.0, 15.0, 28.0, 8.0, 38.0, NA, 19.0, NA, NA, 40.0, NA, NA, 6…

$ Fare <dbl> 7.2500, 71.2833, 7.9250, 53.1000, 8.0500, 8.4583, 51.8625, 21.0750, 11.1333, 30.0708, 16.7000, 26.5500, 8.0500, 31.2750, 7.8542, 16.0000, 29.1250, 13.0000, 18.0000, 7.2250, 26.000…

$ FamSize <dbl> 1, 1, 0, 1, 0, 0, 0, 4, 2, 1, 2, 0, 0, 6, 0, 0, 5, 0, 1, 0, 0, 0, 0, 0, 4, 6, 0, 5, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 2, 1, 1, 1, 0, 3, 0, 0, 1, 0, 2, 1, 5, 0, 1, 1, 1, 0, 0, 0, 3, 7,…# Combine Target with 변환된 예측 변수

titanic.df <- data.frame(Survived = titanic1$Survived,

titanic.Var)

titanic.df %>%

as_tibble# A tibble: 891 × 9

Survived Pclass.1 Pclass.2 Pclass.3 Sex.female Sex.male Age Fare FamSize

<fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 no 0 0 1 0 1 22 7.25 1

2 yes 1 0 0 1 0 38 71.3 1

3 yes 0 0 1 1 0 26 7.92 0

4 yes 1 0 0 1 0 35 53.1 1

5 no 0 0 1 0 1 35 8.05 0

6 no 0 0 1 0 1 NA 8.46 0

7 no 1 0 0 0 1 54 51.9 0

8 no 0 0 1 0 1 2 21.1 4

9 yes 0 0 1 1 0 27 11.1 2

10 yes 0 1 0 1 0 14 30.1 1

# ℹ 881 more rowsglimpse(titanic.df) # 데이터 구조 확인Rows: 891

Columns: 9

$ Survived <fct> no, yes, yes, yes, no, no, no, no, yes, yes, yes, yes, no, no, no, yes, no, yes, no, yes, no, yes, yes, yes, no, yes, no, no, yes, no, no, yes, yes, no, no, no, yes, no, no, yes, …

$ Pclass.1 <dbl> 0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0,…

$ Pclass.2 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0,…

$ Pclass.3 <dbl> 1, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1,…

$ Sex.female <dbl> 0, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 0,…

$ Sex.male <dbl> 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 1,…

$ Age <dbl> 22.0, 38.0, 26.0, 35.0, 35.0, NA, 54.0, 2.0, 27.0, 14.0, 4.0, 58.0, 20.0, 39.0, 14.0, 55.0, 2.0, NA, 31.0, NA, 35.0, 34.0, 15.0, 28.0, 8.0, 38.0, NA, 19.0, NA, NA, 40.0, NA, NA, 6…

$ Fare <dbl> 7.2500, 71.2833, 7.9250, 53.1000, 8.0500, 8.4583, 51.8625, 21.0750, 11.1333, 30.0708, 16.7000, 26.5500, 8.0500, 31.2750, 7.8542, 16.0000, 29.1250, 13.0000, 18.0000, 7.2250, 26.000…

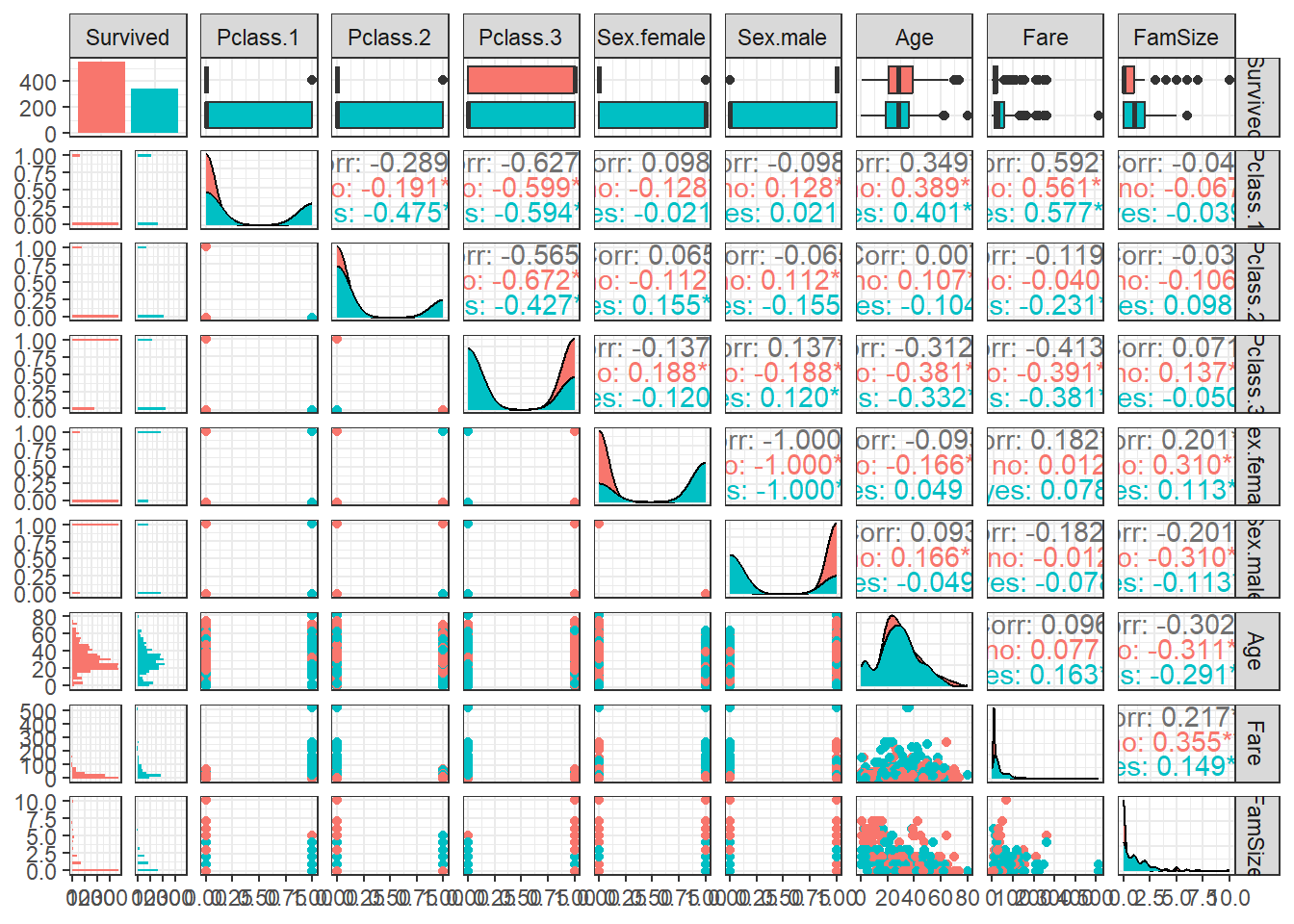

$ FamSize <dbl> 1, 1, 0, 1, 0, 0, 0, 4, 2, 1, 2, 0, 0, 6, 0, 0, 5, 0, 1, 0, 0, 0, 0, 0, 4, 6, 0, 5, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 2, 1, 1, 1, 0, 3, 0, 0, 1, 0, 2, 1, 5, 0, 1, 1, 1, 0, 0, 0, 3, 7,…1.3 데이터 탐색

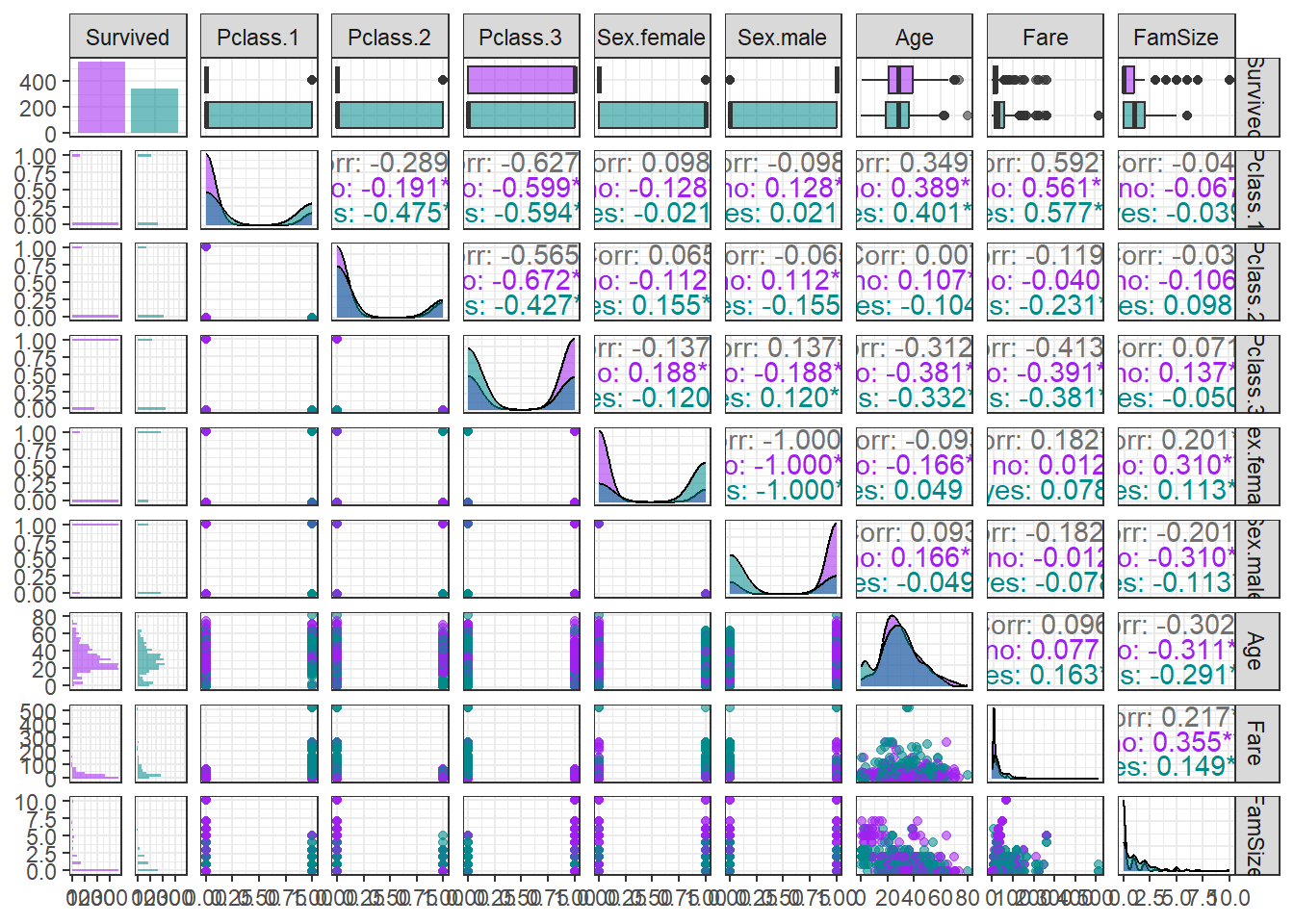

ggpairs(titanic.df,

aes(colour = Survived)) + # Target의 범주에 따라 색깔을 다르게 표현

theme_bw()

ggpairs(titanic.df,

aes(colour = Survived, alpha = 0.8)) + # Target의 범주에 따라 색깔을 다르게 표현

scale_colour_manual(values = c("purple","cyan4")) + # 특정 색깔 지정

scale_fill_manual(values = c("purple","cyan4")) + # 특정 색깔 지정

theme_bw()

1.4 데이터 분할

# Partition (Training Dataset : Test Dataset = 7:3)

y <- titanic.df$Survived # Target

set.seed(200)

ind <- createDataPartition(y, p = 0.7, list =T) # Index를 이용하여 7:3으로 분할

titanic.trd <- titanic.df[ind$Resample1,] # Training Dataset

titanic.ted <- titanic.df[-ind$Resample1,] # Test Dataset1.5 데이터 전처리 II

# 1. Imputation

titanic.trd.Imp <- titanic.trd %>%

mutate(Age = replace_na(Age, mean(Age, na.rm = TRUE))) # 평균으로 결측값 대체

titanic.ted.Imp <- titanic.ted %>%

mutate(Age = replace_na(Age, mean(titanic.trd$Age, na.rm = TRUE))) # Training Dataset을 이용하여 결측값 대체

# 2. Standardization

preProcValues <- preProcess(titanic.trd.Imp,

method = c("center", "scale")) # Standardization 정의 -> Training Dataset에 대한 평균과 표준편차 계산

titanic.trd.Imp <- predict(preProcValues, titanic.trd.Imp) # Standardization for Training Dataset

titanic.ted.Imp <- predict(preProcValues, titanic.ted.Imp) # Standardization for Test Dataset

glimpse(titanic.trd.Imp) # 데이터 구조 확인Rows: 625

Columns: 9

$ Survived <fct> no, yes, yes, no, no, no, yes, yes, yes, yes, no, no, yes, no, yes, no, yes, no, no, no, yes, no, no, yes, yes, no, no, no, no, no, yes, no, no, no, yes, no, yes, no, no, no, yes,…

$ Pclass.1 <dbl> -0.593506, -0.593506, 1.682207, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, 1.682207, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, 1.682…

$ Pclass.2 <dbl> -0.4694145, -0.4694145, -0.4694145, -0.4694145, -0.4694145, -0.4694145, -0.4694145, 2.1269048, -0.4694145, -0.4694145, -0.4694145, -0.4694145, 2.1269048, -0.4694145, -0.4694145, 2…

$ Pclass.3 <dbl> 0.888575, 0.888575, -1.123597, 0.888575, 0.888575, 0.888575, 0.888575, -1.123597, 0.888575, -1.123597, 0.888575, 0.888575, -1.123597, 0.888575, 0.888575, -1.123597, -1.123597, 0.8…

$ Sex.female <dbl> -0.7572241, 1.3184999, 1.3184999, -0.7572241, -0.7572241, -0.7572241, 1.3184999, 1.3184999, 1.3184999, 1.3184999, -0.7572241, 1.3184999, -0.7572241, 1.3184999, 1.3184999, -0.75722…

$ Sex.male <dbl> 0.7572241, -1.3184999, -1.3184999, 0.7572241, 0.7572241, 0.7572241, -1.3184999, -1.3184999, -1.3184999, -1.3184999, 0.7572241, -1.3184999, 0.7572241, -1.3184999, -1.3184999, 0.757…

$ Age <dbl> -0.61306970, -0.30411628, 0.39102893, 0.39102893, 0.00000000, -2.15783684, -0.22687792, -1.23097656, -2.00336012, 2.16751113, 0.69998236, -1.23097656, 0.00000000, 0.08207551, 0.00…

$ Fare <dbl> -0.51776394, -0.50463325, 0.37414970, -0.50220165, -0.49425904, -0.24882814, -0.44222264, -0.07383411, -0.33393441, -0.14232374, -0.05040897, -0.50601052, -0.40590999, -0.30864569…

$ FamSize <dbl> 0.04506631, -0.55421976, 0.04506631, -0.55421976, -0.55421976, 1.84292454, 0.64435239, 0.04506631, 0.64435239, -0.55421976, 3.04149669, -0.55421976, -0.55421976, 0.04506631, -0.55…glimpse(titanic.ted.Imp) # 데이터 구조 확인Rows: 266

Columns: 9

$ Survived <fct> yes, no, no, yes, no, yes, yes, yes, yes, yes, no, no, yes, yes, no, yes, no, yes, yes, no, yes, no, no, no, no, no, no, yes, yes, no, no, no, no, no, no, no, no, no, no, yes, no,…

$ Pclass.1 <dbl> 1.682207, 1.682207, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, -0.593506, 1.682…

$ Pclass.2 <dbl> -0.4694145, -0.4694145, -0.4694145, 2.1269048, -0.4694145, 2.1269048, -0.4694145, -0.4694145, -0.4694145, 2.1269048, -0.4694145, -0.4694145, 2.1269048, 2.1269048, -0.4694145, 2.12…

$ Pclass.3 <dbl> -1.123597, -1.123597, 0.888575, -1.123597, 0.888575, -1.123597, 0.888575, 0.888575, 0.888575, -1.123597, 0.888575, 0.888575, -1.123597, -1.123597, 0.888575, -1.123597, -1.123597, …

$ Sex.female <dbl> 1.3184999, -0.7572241, -0.7572241, 1.3184999, -0.7572241, -0.7572241, 1.3184999, 1.3184999, -0.7572241, 1.3184999, -0.7572241, -0.7572241, 1.3184999, 1.3184999, -0.7572241, 1.3184…

$ Sex.male <dbl> -1.3184999, 0.7572241, 0.7572241, -1.3184999, 0.7572241, 0.7572241, -1.3184999, -1.3184999, 0.7572241, -1.3184999, 0.7572241, 0.7572241, -1.3184999, -1.3184999, 0.7572241, -1.3184…

$ Age <dbl> 0.62274400, 1.85855771, -0.76754642, 1.93579607, -2.15783684, 0.31379058, -1.15373820, 0.62274400, 0.00000000, -2.08059848, 0.00000000, -0.69030806, -0.07240121, -0.69030806, -0.1…

$ Fare <dbl> 0.727866891, 0.350076786, -0.502201647, -0.347551409, -0.092232621, -0.405909990, -0.502606266, -0.048220525, -0.518168555, 0.150037190, -0.502201647, -0.507064862, -0.153022808, …

$ FamSize <dbl> 0.04506631, -0.55421976, -0.55421976, -0.55421976, 2.44221062, -0.55421976, -0.55421976, 3.04149669, -0.55421976, 1.24363847, -0.55421976, -0.55421976, 0.04506631, -0.55421976, -0…1.6 모형 훈련

Nearest Neighborhood Algorithm은 다양한 Package(예를 들어, "caret", "class")를 통해 수행할 수 있다. Package "class"의 함수 knn()를 이용하면 특정 class에 대한 예측 확률만 얻을 수 있는 반면, Package "caret"의 함수 knn3()를 이용하면 각 class에 대한 예측 확률을 얻을 수 있다. 그래서 여기서는 Package "caret"을 이용하여 모형 훈련을 수행한다.

knn.model <- knn3(Survived ~ ., # Target ~ 예측 변수

data = titanic.trd.Imp, # Training Dataset

k = 4) # 이웃 개수

knn.model4-nearest neighbor model

Training set outcome distribution:

no yes

385 240 Caution! Package "caret"에서 제공하는 함수 knn3Train()를 이용하면 Training Dataset에 대한 모형 훈련과 Test Dataset에 대한 예측을 한 번에 수행할 수 있다.

# 모형 훈련 & 예측 한꺼번에

knn3Train(titanic.trd.Imp[, -1], # Training Dataset including Only 예측 변수

titanic.ted.Imp[, -1], # Test Dataset including Only 예측 변수

cl = titanic.trd.Imp[, 1], # Target of Training Dataset

k = 4) # 이웃 개수 [1] "yes" "no" "no" "yes" "no" "no" "yes" "no" "no" "yes" "no" "no" "yes" "yes" "no" "yes" "no" "no" "yes" "no" "no" "no" "no" "no" "no" "no" "no" "no" "yes" "no" "yes" "no"

[33] "no" "no" "no" "no" "no" "no" "no" "yes" "no" "no" "no" "yes" "no" "no" "no" "no" "yes" "no" "no" "no" "no" "yes" "no" "yes" "no" "no" "no" "yes" "no" "no" "yes" "no"

[65] "yes" "no" "no" "yes" "no" "no" "no" "no" "yes" "yes" "no" "no" "yes" "no" "no" "no" "yes" "no" "no" "no" "no" "no" "yes" "no" "no" "no" "no" "no" "no" "yes" "yes" "yes"

[97] "yes" "yes" "no" "no" "no" "yes" "yes" "yes" "no" "yes" "no" "yes" "no" "yes" "yes" "no" "yes" "yes" "no" "no" "yes" "no" "no" "no" "yes" "no" "no" "yes" "yes" "yes" "no" "yes"

[129] "yes" "yes" "no" "yes" "no" "no" "yes" "yes" "no" "no" "no" "no" "yes" "no" "no" "no" "yes" "no" "no" "no" "no" "no" "no" "no" "no" "no" "no" "yes" "no" "yes" "no" "no"

[161] "yes" "no" "yes" "yes" "no" "yes" "yes" "no" "no" "no" "yes" "no" "yes" "yes" "yes" "no" "yes" "no" "no" "no" "no" "no" "no" "yes" "no" "no" "no" "yes" "no" "yes" "no" "no"

[193] "yes" "no" "no" "no" "no" "yes" "no" "no" "no" "no" "no" "no" "no" "no" "no" "no" "yes" "no" "no" "yes" "yes" "no" "no" "no" "yes" "yes" "no" "no" "yes" "no" "no" "yes"

[225] "yes" "yes" "no" "no" "no" "no" "yes" "no" "no" "no" "yes" "no" "no" "yes" "yes" "no" "no" "yes" "no" "no" "no" "yes" "no" "yes" "no" "no" "yes" "no" "no" "no" "no" "yes"

[257] "yes" "yes" "no" "yes" "no" "yes" "yes" "no" "yes" "yes"

attr(,"prob")

no yes

[1,] 0.0000000 1.00000000

[2,] 0.7500000 0.25000000

[3,] 0.7500000 0.25000000

[4,] 0.5000000 0.50000000

[5,] 0.7500000 0.25000000

[6,] 1.0000000 0.00000000

[7,] 0.2500000 0.75000000

[8,] 1.0000000 0.00000000

[9,] 1.0000000 0.00000000

[10,] 0.0000000 1.00000000

[11,] 1.0000000 0.00000000

[12,] 0.8000000 0.20000000

[13,] 0.2500000 0.75000000

[14,] 0.2500000 0.75000000

[15,] 0.7500000 0.25000000

[16,] 0.0000000 1.00000000

[17,] 0.5000000 0.50000000

[18,] 0.5000000 0.50000000

[19,] 0.0000000 1.00000000

[20,] 1.0000000 0.00000000

[21,] 1.0000000 0.00000000

[22,] 0.8571429 0.14285714

[23,] 1.0000000 0.00000000

[24,] 1.0000000 0.00000000

[25,] 1.0000000 0.00000000

[26,] 1.0000000 0.00000000

[27,] 0.7500000 0.25000000

[28,] 0.5000000 0.50000000

[29,] 0.2500000 0.75000000

[30,] 0.7500000 0.25000000

[31,] 0.0000000 1.00000000

[32,] 1.0000000 0.00000000

[33,] 1.0000000 0.00000000

[34,] 0.8000000 0.20000000

[35,] 0.7500000 0.25000000

[36,] 0.7500000 0.25000000

[37,] 1.0000000 0.00000000

[38,] 1.0000000 0.00000000

[39,] 0.7500000 0.25000000

[40,] 0.0000000 1.00000000

[41,] 1.0000000 0.00000000

[42,] 0.7500000 0.25000000

[43,] 1.0000000 0.00000000

[44,] 0.0000000 1.00000000

[45,] 1.0000000 0.00000000

[46,] 0.5000000 0.50000000

[47,] 1.0000000 0.00000000

[48,] 0.7500000 0.25000000

[49,] 0.0000000 1.00000000

[50,] 1.0000000 0.00000000

[51,] 1.0000000 0.00000000

[52,] 0.7500000 0.25000000

[53,] 0.7500000 0.25000000

[54,] 0.2500000 0.75000000

[55,] 1.0000000 0.00000000

[56,] 0.2500000 0.75000000

[57,] 1.0000000 0.00000000

[58,] 0.7500000 0.25000000

[59,] 0.7500000 0.25000000

[60,] 0.0000000 1.00000000

[61,] 0.7500000 0.25000000

[62,] 1.0000000 0.00000000

[63,] 0.0000000 1.00000000

[64,] 0.7500000 0.25000000

[65,] 0.0000000 1.00000000

[66,] 0.7500000 0.25000000

[67,] 0.7500000 0.25000000

[68,] 0.2500000 0.75000000

[69,] 1.0000000 0.00000000

[70,] 1.0000000 0.00000000

[71,] 1.0000000 0.00000000

[72,] 1.0000000 0.00000000

[73,] 0.5000000 0.50000000

[74,] 0.0000000 1.00000000

[75,] 1.0000000 0.00000000

[76,] 0.7500000 0.25000000

[77,] 0.2000000 0.80000000

[78,] 1.0000000 0.00000000

[79,] 1.0000000 0.00000000

[80,] 0.7500000 0.25000000

[81,] 0.2500000 0.75000000

[82,] 1.0000000 0.00000000

[83,] 0.7500000 0.25000000

[84,] 1.0000000 0.00000000

[85,] 0.5000000 0.50000000

[86,] 1.0000000 0.00000000

[87,] 0.5000000 0.50000000

[88,] 0.7500000 0.25000000

[89,] 1.0000000 0.00000000

[90,] 0.8000000 0.20000000

[91,] 1.0000000 0.00000000

[92,] 1.0000000 0.00000000

[93,] 0.7500000 0.25000000

[94,] 0.2000000 0.80000000

[95,] 0.0000000 1.00000000

[96,] 0.0000000 1.00000000

[97,] 0.0000000 1.00000000

[98,] 0.0000000 1.00000000

[99,] 0.8000000 0.20000000

[100,] 1.0000000 0.00000000

[101,] 1.0000000 0.00000000

[102,] 0.0000000 1.00000000

[103,] 0.0000000 1.00000000

[104,] 0.5000000 0.50000000

[105,] 1.0000000 0.00000000

[106,] 0.0000000 1.00000000

[107,] 1.0000000 0.00000000

[108,] 0.0000000 1.00000000

[109,] 1.0000000 0.00000000

[110,] 0.0000000 1.00000000

[111,] 0.0000000 1.00000000

[112,] 1.0000000 0.00000000

[113,] 0.2000000 0.80000000

[114,] 0.4000000 0.60000000

[115,] 1.0000000 0.00000000

[116,] 1.0000000 0.00000000

[117,] 0.0000000 1.00000000

[118,] 0.5000000 0.50000000

[119,] 0.8000000 0.20000000

[120,] 1.0000000 0.00000000

[121,] 0.0000000 1.00000000

[122,] 0.7500000 0.25000000

[123,] 0.5000000 0.50000000

[124,] 0.0000000 1.00000000

[125,] 0.5000000 0.50000000

[126,] 0.5000000 0.50000000

[127,] 0.7500000 0.25000000

[128,] 0.2500000 0.75000000

[129,] 0.5000000 0.50000000

[130,] 0.5000000 0.50000000

[131,] 0.7500000 0.25000000

[132,] 0.2500000 0.75000000

[133,] 1.0000000 0.00000000

[134,] 1.0000000 0.00000000

[135,] 0.0000000 1.00000000

[136,] 0.0000000 1.00000000

[137,] 0.5000000 0.50000000

[138,] 1.0000000 0.00000000

[139,] 1.0000000 0.00000000

[140,] 1.0000000 0.00000000

[141,] 0.0000000 1.00000000

[142,] 1.0000000 0.00000000

[143,] 1.0000000 0.00000000

[144,] 1.0000000 0.00000000

[145,] 0.0000000 1.00000000

[146,] 1.0000000 0.00000000

[147,] 0.7500000 0.25000000

[148,] 1.0000000 0.00000000

[149,] 1.0000000 0.00000000

[150,] 1.0000000 0.00000000

[151,] 0.7500000 0.25000000

[152,] 0.5000000 0.50000000

[153,] 0.8000000 0.20000000

[154,] 1.0000000 0.00000000

[155,] 0.7500000 0.25000000

[156,] 0.2500000 0.75000000

[157,] 0.7500000 0.25000000

[158,] 0.5000000 0.50000000

[159,] 1.0000000 0.00000000

[160,] 1.0000000 0.00000000

[161,] 0.5000000 0.50000000

[162,] 0.7500000 0.25000000

[163,] 0.0000000 1.00000000

[164,] 0.2500000 0.75000000

[165,] 0.7500000 0.25000000

[166,] 0.0000000 1.00000000

[167,] 0.0000000 1.00000000

[168,] 0.5000000 0.50000000

[169,] 1.0000000 0.00000000

[170,] 0.7500000 0.25000000

[171,] 0.5000000 0.50000000

[172,] 1.0000000 0.00000000

[173,] 0.0000000 1.00000000

[174,] 0.2000000 0.80000000

[175,] 0.0000000 1.00000000

[176,] 1.0000000 0.00000000

[177,] 0.0000000 1.00000000

[178,] 1.0000000 0.00000000

[179,] 1.0000000 0.00000000

[180,] 1.0000000 0.00000000

[181,] 0.7500000 0.25000000

[182,] 1.0000000 0.00000000

[183,] 1.0000000 0.00000000

[184,] 0.0000000 1.00000000

[185,] 1.0000000 0.00000000

[186,] 1.0000000 0.00000000

[187,] 1.0000000 0.00000000

[188,] 0.0000000 1.00000000

[189,] 1.0000000 0.00000000

[190,] 0.0000000 1.00000000

[191,] 1.0000000 0.00000000

[192,] 1.0000000 0.00000000

[193,] 0.5000000 0.50000000

[194,] 1.0000000 0.00000000

[195,] 1.0000000 0.00000000

[196,] 1.0000000 0.00000000

[197,] 0.7500000 0.25000000

[198,] 0.5000000 0.50000000

[199,] 1.0000000 0.00000000

[200,] 1.0000000 0.00000000

[201,] 0.8571429 0.14285714

[202,] 0.5000000 0.50000000

[203,] 1.0000000 0.00000000

[204,] 1.0000000 0.00000000

[205,] 1.0000000 0.00000000

[206,] 1.0000000 0.00000000

[207,] 1.0000000 0.00000000

[208,] 0.7500000 0.25000000

[209,] 0.1666667 0.83333333

[210,] 1.0000000 0.00000000

[211,] 0.8000000 0.20000000

[212,] 0.2500000 0.75000000

[213,] 0.0000000 1.00000000

[214,] 1.0000000 0.00000000

[215,] 0.7500000 0.25000000

[216,] 1.0000000 0.00000000

[217,] 0.0000000 1.00000000

[218,] 0.0000000 1.00000000

[219,] 1.0000000 0.00000000

[220,] 1.0000000 0.00000000

[221,] 0.5000000 0.50000000

[222,] 0.7500000 0.25000000

[223,] 1.0000000 0.00000000

[224,] 0.0000000 1.00000000

[225,] 0.0000000 1.00000000

[226,] 0.0000000 1.00000000

[227,] 0.7500000 0.25000000

[228,] 0.7500000 0.25000000

[229,] 1.0000000 0.00000000

[230,] 1.0000000 0.00000000

[231,] 0.2500000 0.75000000

[232,] 0.7500000 0.25000000

[233,] 1.0000000 0.00000000

[234,] 1.0000000 0.00000000

[235,] 0.5000000 0.50000000

[236,] 1.0000000 0.00000000

[237,] 1.0000000 0.00000000

[238,] 0.0000000 1.00000000

[239,] 0.5000000 0.50000000

[240,] 0.6000000 0.40000000

[241,] 0.7500000 0.25000000

[242,] 0.0000000 1.00000000

[243,] 1.0000000 0.00000000

[244,] 0.7500000 0.25000000

[245,] 1.0000000 0.00000000

[246,] 0.0000000 1.00000000

[247,] 1.0000000 0.00000000

[248,] 0.0000000 1.00000000

[249,] 1.0000000 0.00000000

[250,] 1.0000000 0.00000000

[251,] 0.2500000 0.75000000

[252,] 0.7500000 0.25000000

[253,] 0.7500000 0.25000000

[254,] 1.0000000 0.00000000

[255,] 1.0000000 0.00000000

[256,] 0.0000000 1.00000000

[257,] 0.0000000 1.00000000

[258,] 0.0000000 1.00000000

[259,] 0.9166667 0.08333333

[260,] 0.0000000 1.00000000

[261,] 0.7500000 0.25000000

[262,] 0.2500000 0.75000000

[263,] 0.2500000 0.75000000

[264,] 1.0000000 0.00000000

[265,] 0.2500000 0.75000000

[266,] 0.5000000 0.500000001.7 모형 평가

Caution! 모형 평가를 위해 Test Dataset에 대한 예측 class/확률 이 필요하며, 함수 predict()를 이용하여 생성한다.

# 예측 class 생성

knn.pred <- predict(knn.model,

newdata = titanic.ted.Imp[,-1], # Test Dataset including Only 예측 변수

type = "class") # 예측 class 생성

knn.pred %>%

as_tibble# A tibble: 266 × 1

value

<fct>

1 yes

2 no

3 no

4 no

5 no

6 no

7 yes

8 no

9 no

10 yes

# ℹ 256 more rows1.7.1 ConfusionMatrix

CM <- caret::confusionMatrix(knn.pred, titanic.ted.Imp$Survived,

positive = "yes") # confusionMatrix(예측 class, 실제 class, positive = "관심 class")

CMConfusion Matrix and Statistics

Reference

Prediction no yes

no 148 28

yes 16 74

Accuracy : 0.8346

95% CI : (0.7844, 0.8772)

No Information Rate : 0.6165

P-Value [Acc > NIR] : 7.118e-15

Kappa : 0.6422

Mcnemar's Test P-Value : 0.09725

Sensitivity : 0.7255

Specificity : 0.9024

Pos Pred Value : 0.8222

Neg Pred Value : 0.8409

Prevalence : 0.3835

Detection Rate : 0.2782

Detection Prevalence : 0.3383

Balanced Accuracy : 0.8140

'Positive' Class : yes

1.7.2 ROC 곡선

# 예측 확률 생성

test.knn.prob <- predict(knn.model,

newdata = titanic.ted.Imp[,-1], # Test Dataset including Only 예측 변수

type = "prob") # 예측 확률 생성

test.knn.prob %>%

as_tibble# A tibble: 266 × 2

no yes

<dbl> <dbl>

1 0 1

2 0.75 0.25

3 0.75 0.25

4 0.5 0.5

5 0.75 0.25

6 1 0

7 0.25 0.75

8 1 0

9 1 0

10 0 1

# ℹ 256 more rowstest.knn.prob <- test.knn.prob[,2] # "Survived = yes"에 대한 예측 확률

ac <- titanic.ted.Imp$Survived # Test Dataset의 실제 class

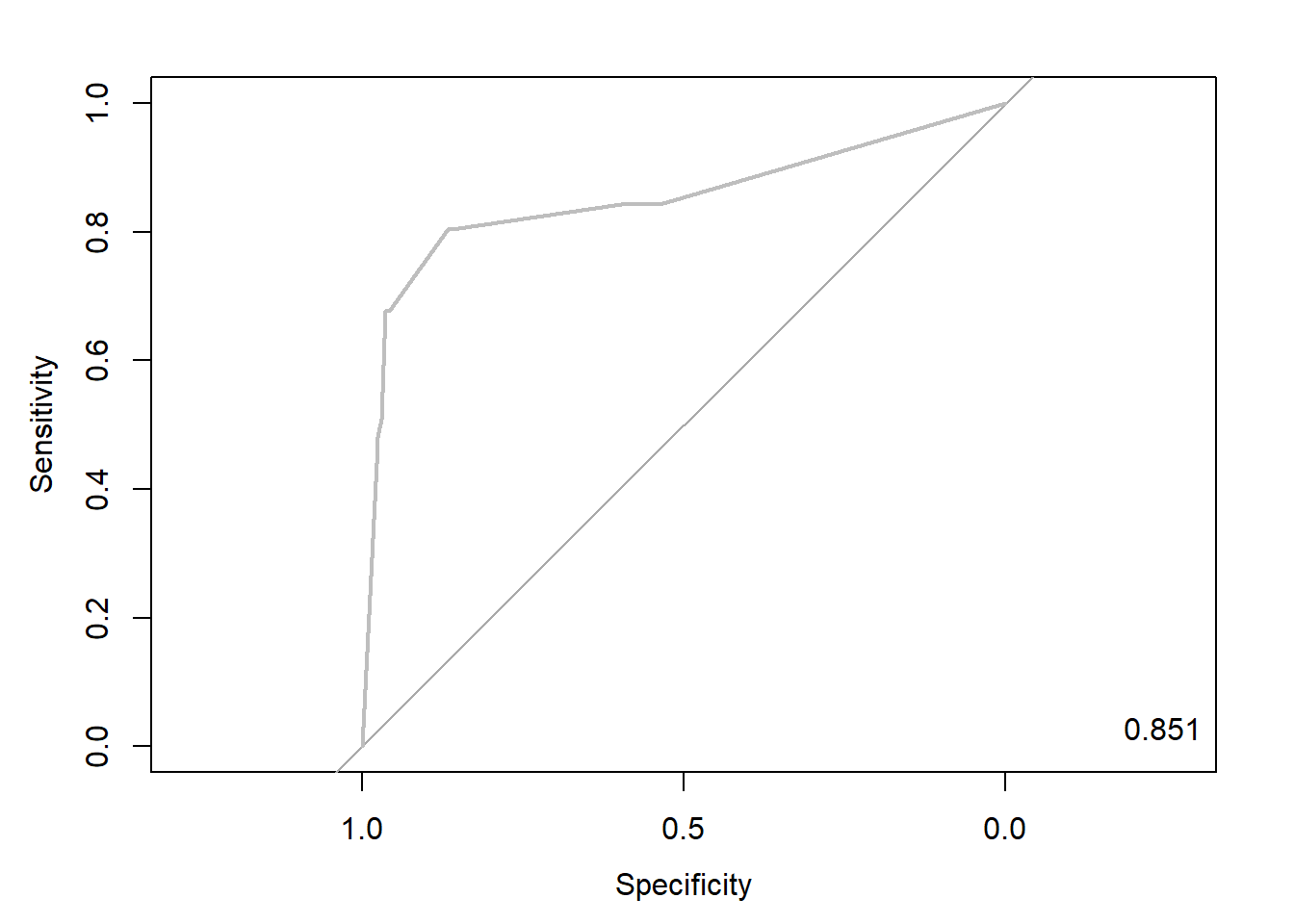

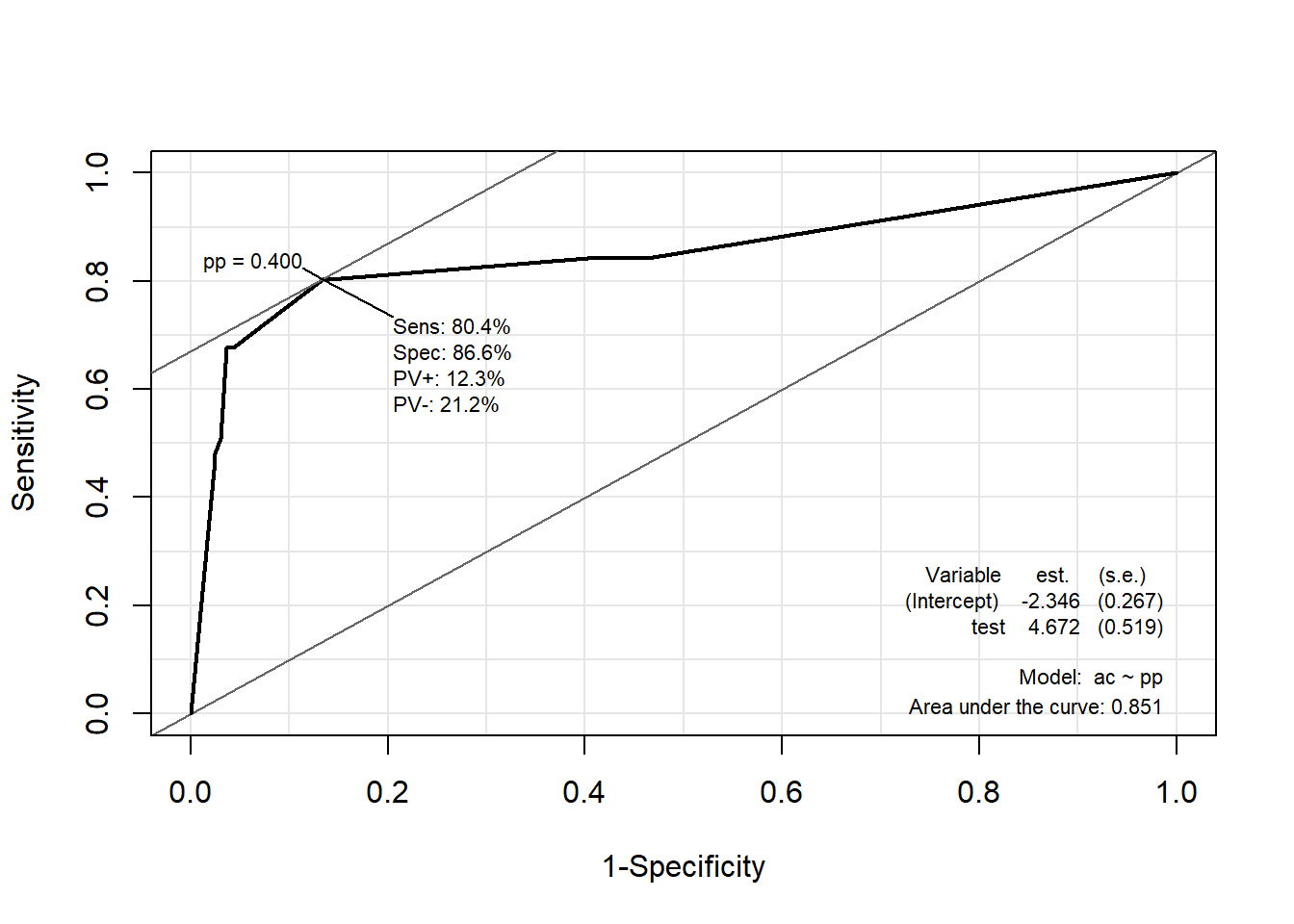

pp <- as.numeric(test.knn.prob) # 예측 확률을 수치형으로 변환1.7.2.1 Package “pROC”

pacman::p_load("pROC")

knn.roc <- roc(ac, pp, plot=T, col="gray") # roc(실제 class, 예측 확률)

auc <- round(auc(knn.roc),3)

legend("bottomright", legend = auc, bty = "n")

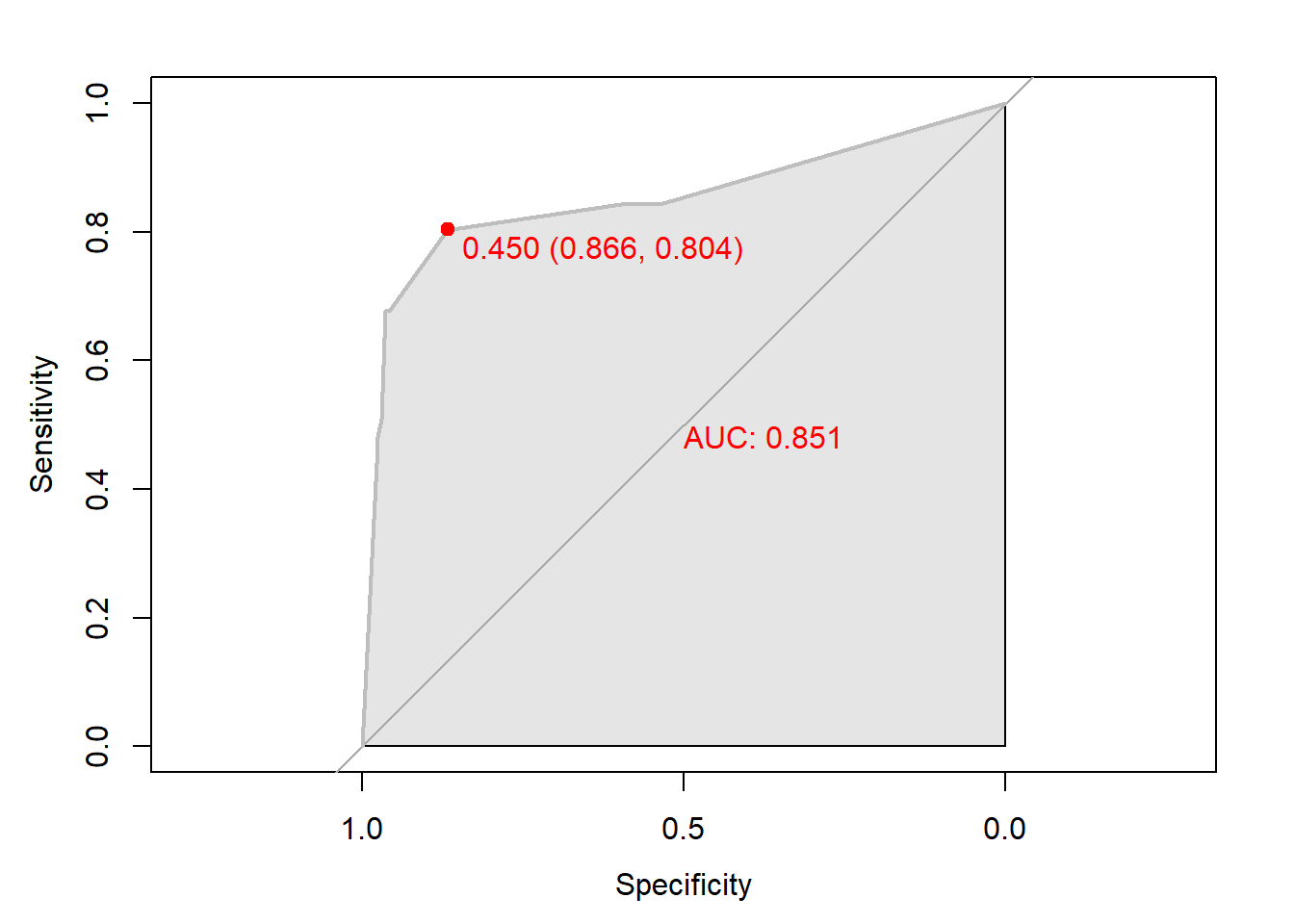

Caution! Package "pROC"를 통해 출력한 ROC 곡선은 다양한 함수를 이용해서 그래프를 수정할 수 있다.

# 함수 plot.roc() 이용

plot.roc(knn.roc,

col="gray", # Line Color

print.auc = TRUE, # AUC 출력 여부

print.auc.col = "red", # AUC 글씨 색깔

print.thres = TRUE, # Cutoff Value 출력 여부

print.thres.pch = 19, # Cutoff Value를 표시하는 도형 모양

print.thres.col = "red", # Cutoff Value를 표시하는 도형의 색깔

auc.polygon = TRUE, # 곡선 아래 면적에 대한 여부

auc.polygon.col = "gray90") # 곡선 아래 면적의 색깔

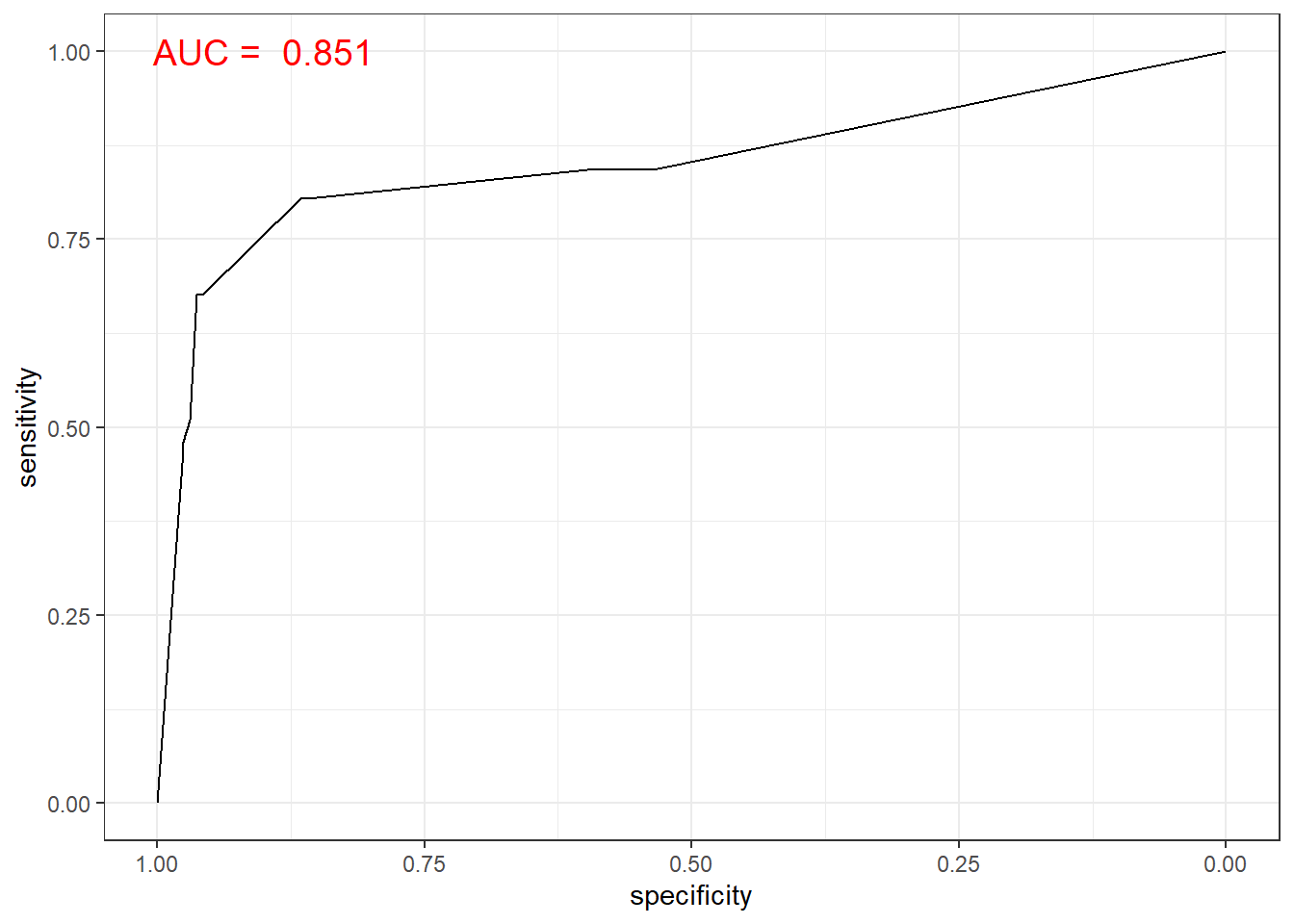

# 함수 ggroc() 이용

ggroc(knn.roc) +

annotate(geom = "text", x = 0.9, y = 1.0,

label = paste("AUC = ", auc),

size = 5,

color="red") +

theme_bw()

1.7.2.2 Package “Epi”

pacman::p_load("Epi")

# install_version("etm", version = "1.1", repos = "http://cran.us.r-project.org")

ROC(pp, ac, plot="ROC") # ROC(예측 확률, 실제 class)

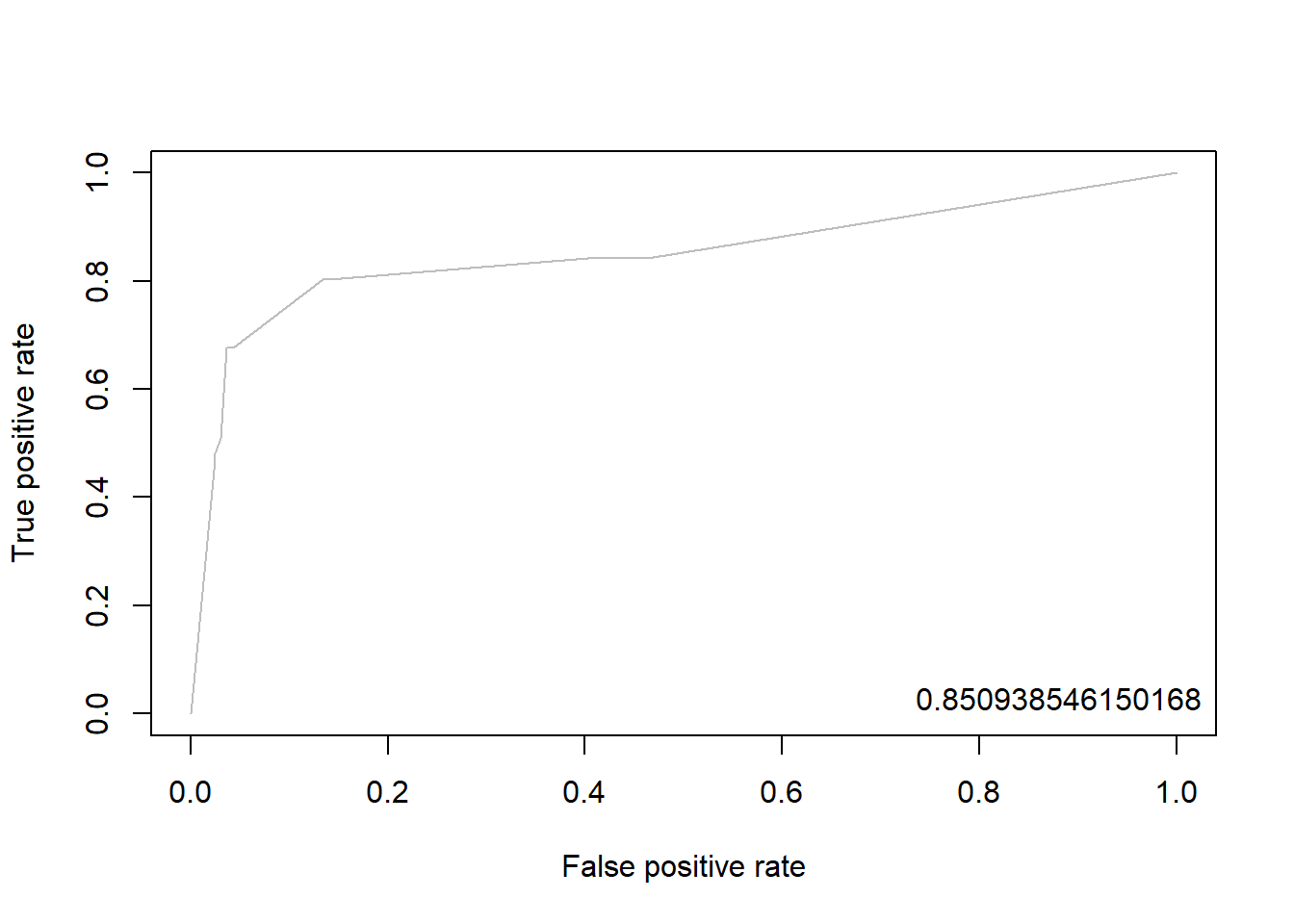

1.7.2.3 Package “ROCR”

pacman::p_load("ROCR")

knn.pred <- prediction(pp, ac) # prediction(예측 확률, 실제 class)

knn.perf <- performance(knn.pred, "tpr", "fpr") # performance(, "민감도", "1-특이도")

plot(knn.perf, col = "gray") # ROC Curve

perf.auc <- performance(knn.pred, "auc") # AUC

auc <- attributes(perf.auc)$y.values

legend("bottomright", legend = auc, bty = "n")

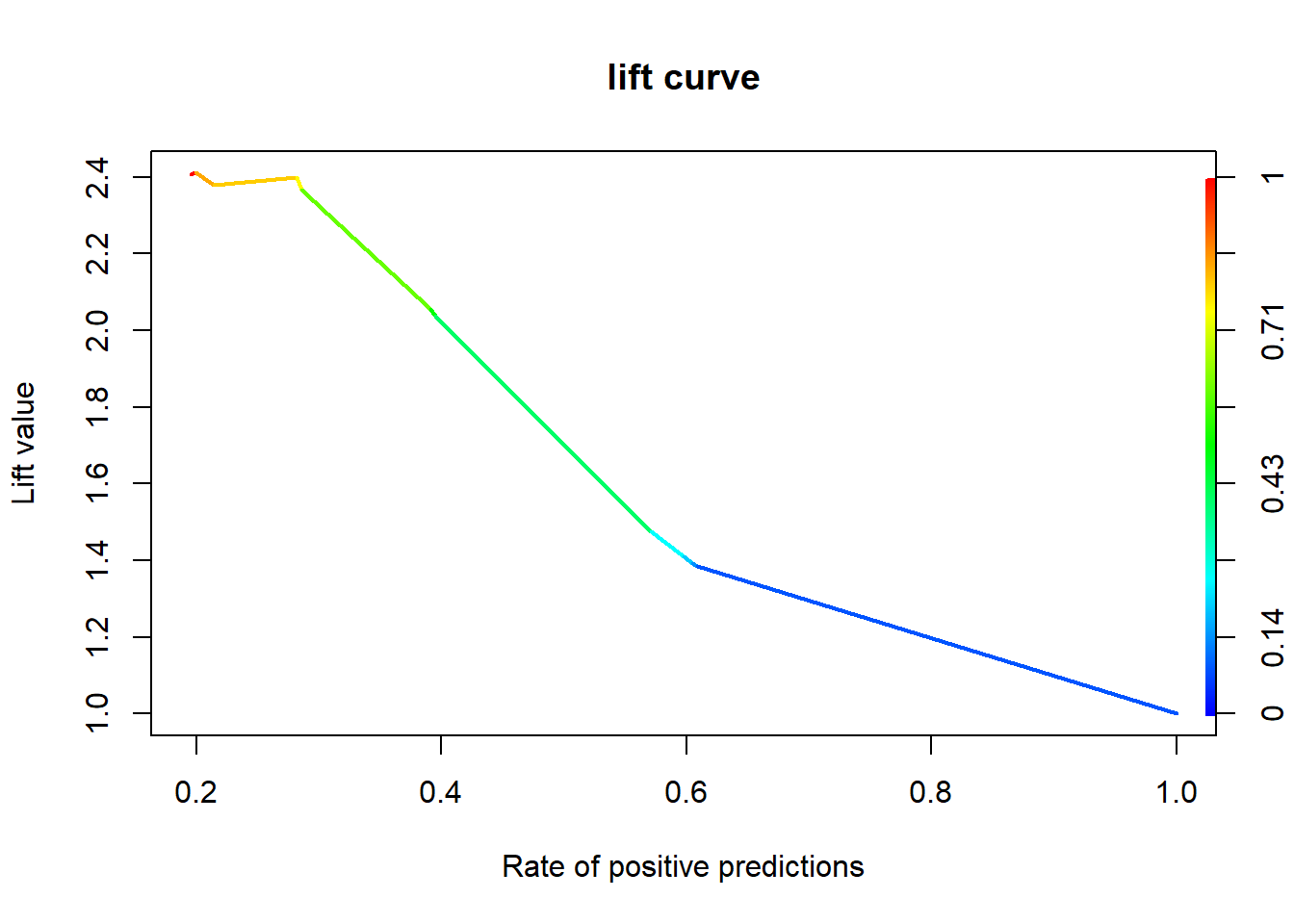

1.7.3 향상 차트

1.7.3.1 Package “ROCR”

knn.perf <- performance(knn.pred, "lift", "rpp") # Lift Chart

plot(knn.perf, main = "lift curve",

colorize = T, # Coloring according to cutoff

lwd = 2)